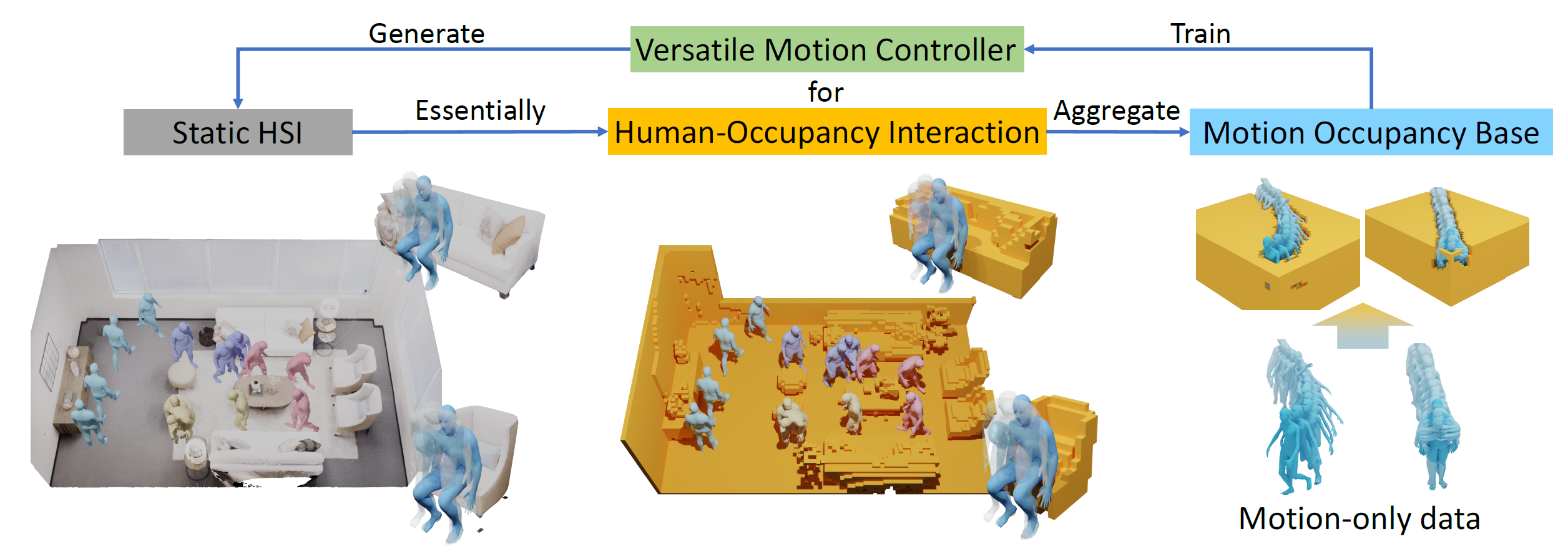

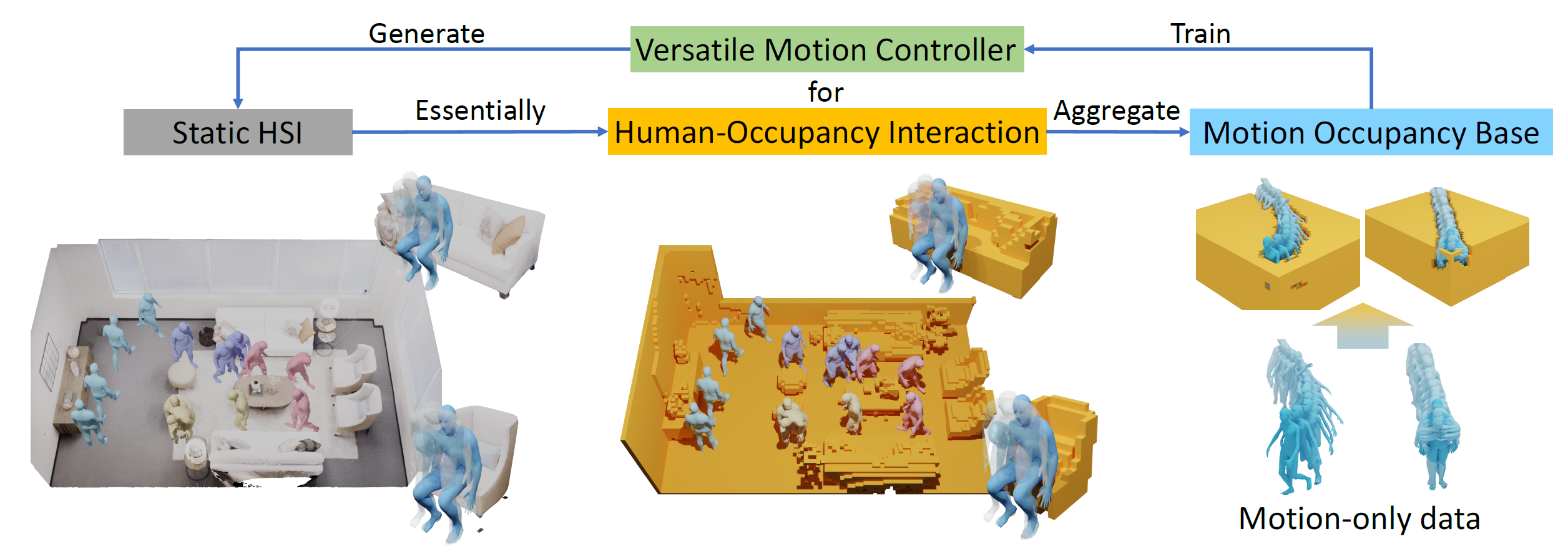

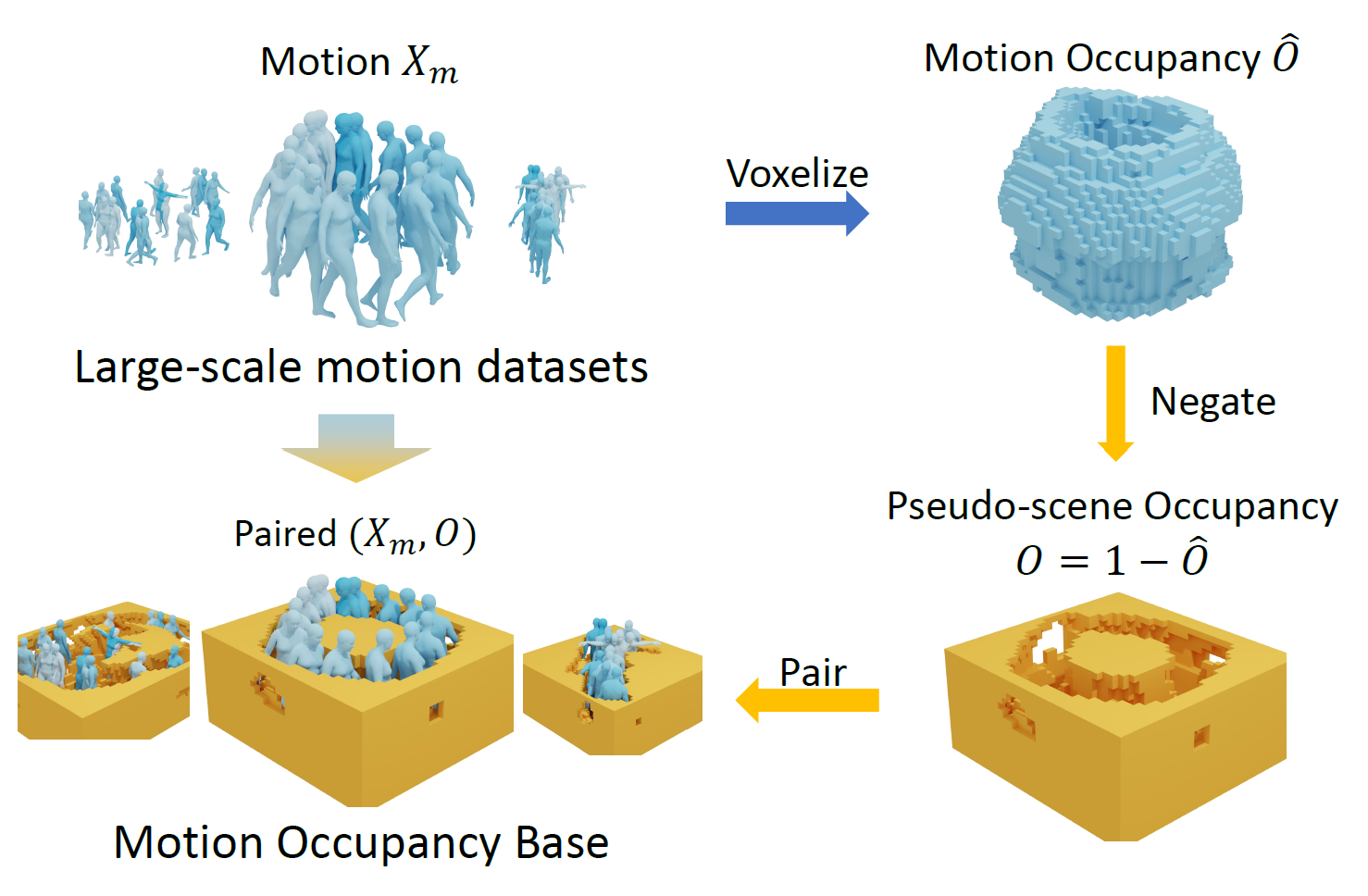

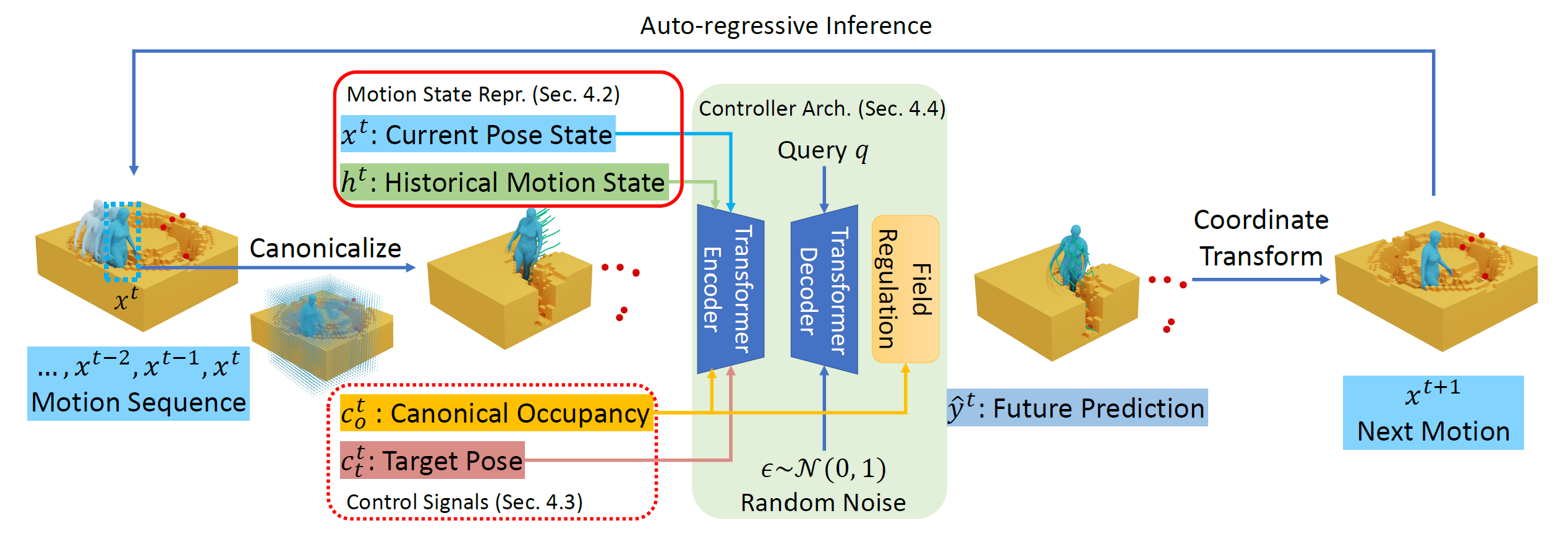

Human-Scene Interaction (HSI) generation is challenging yet crucial for various downstream tasks. One major obstacle is the limited data scale: high-quality data with simultaneously captured humans and 3D environments is rare, which limits data diversity and complexity. In this work, we argue that, from an abstract physical perspective, interacting with a scene is essentially interacting with the space occupancy of that scene, which leads us to a novel unified view of Human-Occupancy Interaction. By treating pure motion sequences as records of humans interacting with invisible scene occupancy, we can aggregate motion-only data into a large-scale paired human-occupancy interaction database: Motion Occupancy Base (MOB). The need for costly paired motion-scene datasets with high-quality scene scans is thus substantially alleviated. Under this unified view of Human-Occupancy Interaction, we propose a single motion controller that reaches the target state given the surrounding occupancy. Once trained on MOB with its complex occupancy layouts, the controller can handle cramped scenes and generalizes well to general scenes of limited complexity. Without any ground-truth (GT) 3D scenes for training, our method generates realistic and stable HSI motions in diverse scenarios, including both static and dynamic scenes. Our code and data will be made publicly available.
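To make the idea of reading a pure motion sequence as a record of interaction with invisible occupancy more concrete, the following toy sketch marks the voxels a moving body passes through as free space and treats everything else as space that may be occupied. This is an illustration under stated assumptions, not the authors' pipeline: the function name `swept_free_space`, the spherical joint radius, the grid parameters, and the rule that the complement of the swept volume is candidate occupancy are all hypothetical choices made for this example.

```python
import numpy as np


def swept_free_space(joints, grid_min, voxel_size, grid_shape, radius):
    """Return a boolean grid that is True where some joint passed nearby.

    joints: array of shape (T, J, 3), joint positions over T frames.
    All parameters are illustrative assumptions, not values from the paper.
    """
    # Integer voxel indices, shape (X, Y, Z, 3).
    idx = np.stack(
        np.meshgrid(*[np.arange(n) for n in grid_shape], indexing="ij"),
        axis=-1,
    )
    # World coordinates of every voxel centre.
    centres = np.asarray(grid_min, dtype=float) + (idx + 0.5) * voxel_size

    points = np.asarray(joints, dtype=float).reshape(-1, 3)
    swept = np.zeros(grid_shape, dtype=bool)
    for p in points:
        # A voxel is swept if its centre lies within `radius` of a joint.
        swept |= np.linalg.norm(centres - p, axis=-1) <= radius
    return swept


# Toy motion: a single "joint" sliding along the x-axis.
T = 5
joints = np.zeros((T, 1, 3))
joints[:, 0, 0] = np.linspace(0.25, 1.75, T)
joints[:, 0, 1] = 0.25
joints[:, 0, 2] = 0.25

swept = swept_free_space(
    joints,
    grid_min=(0.0, 0.0, 0.0),
    voxel_size=0.5,
    grid_shape=(4, 4, 4),
    radius=0.3,
)
# Assumption: anything the body never touched may be occupied.
may_be_occupied = ~swept
```

In this toy setup only the row of voxels along the trajectory is marked as free. Pairing each motion with such a grid gives a (motion, occupancy) sample without any scene scan, which is the kind of pairing the abstract describes at a high level.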

@article{liu2023revisit,
  title={Revisit Human-Scene Interaction via Space Occupancy},
  author={Liu, Xinpeng and Hou, Haowen and Yang, Yanchao and Li, Yong-Lu and Lu, Cewu},
  journal={arXiv preprint arXiv:2312.02700},
  year={2023}
}